AI supports high-quality, human-first care

We put technological advances to work to create clinician-informed, responsible AI tools. Our human therapists and psychiatric providers remain at the core of everything we do, and smart and ethical use of our proprietary AI empowers them to create better experiences and outcomes.

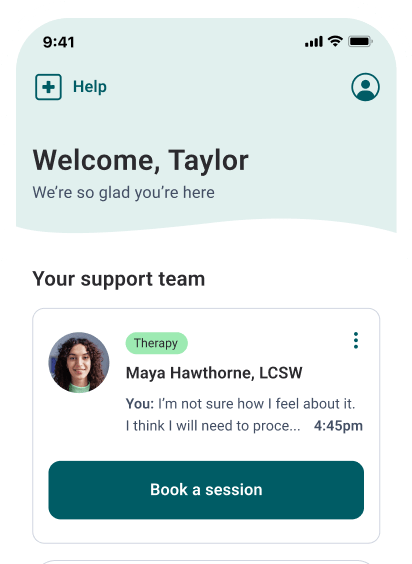

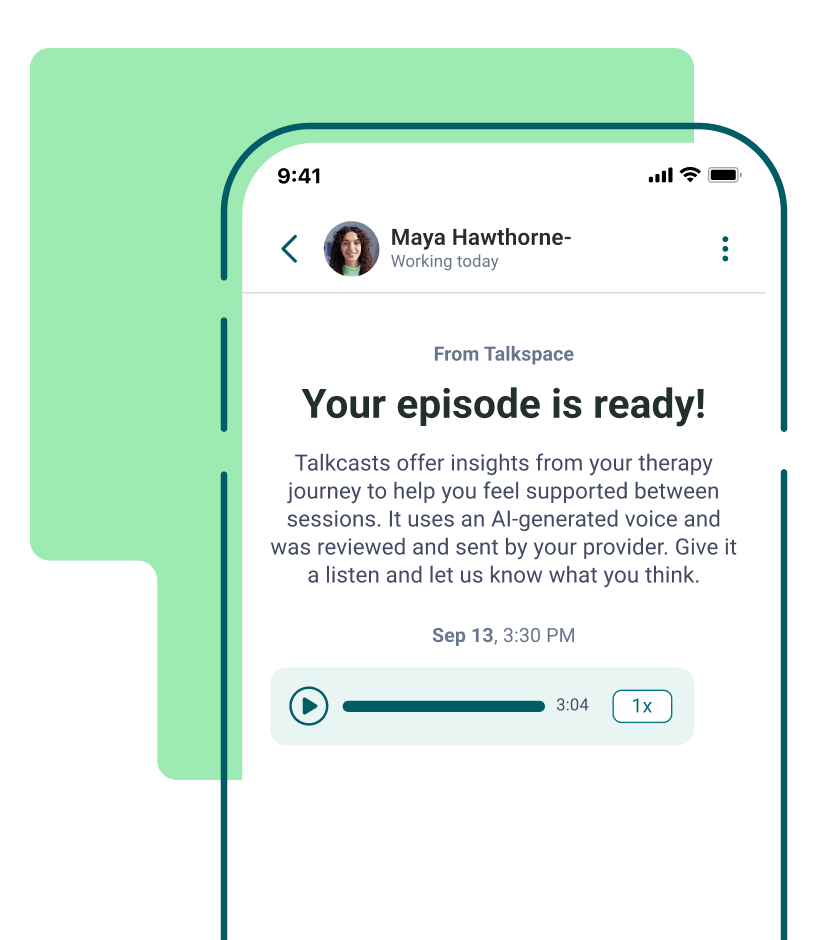

Between-session engagement

Talkcast personalized "podcasts" and tailored self-guided content support members' progress

Enhanced member safety

An automated, provider-facing alert system for detecting risk of self-harm adds a layer of safety

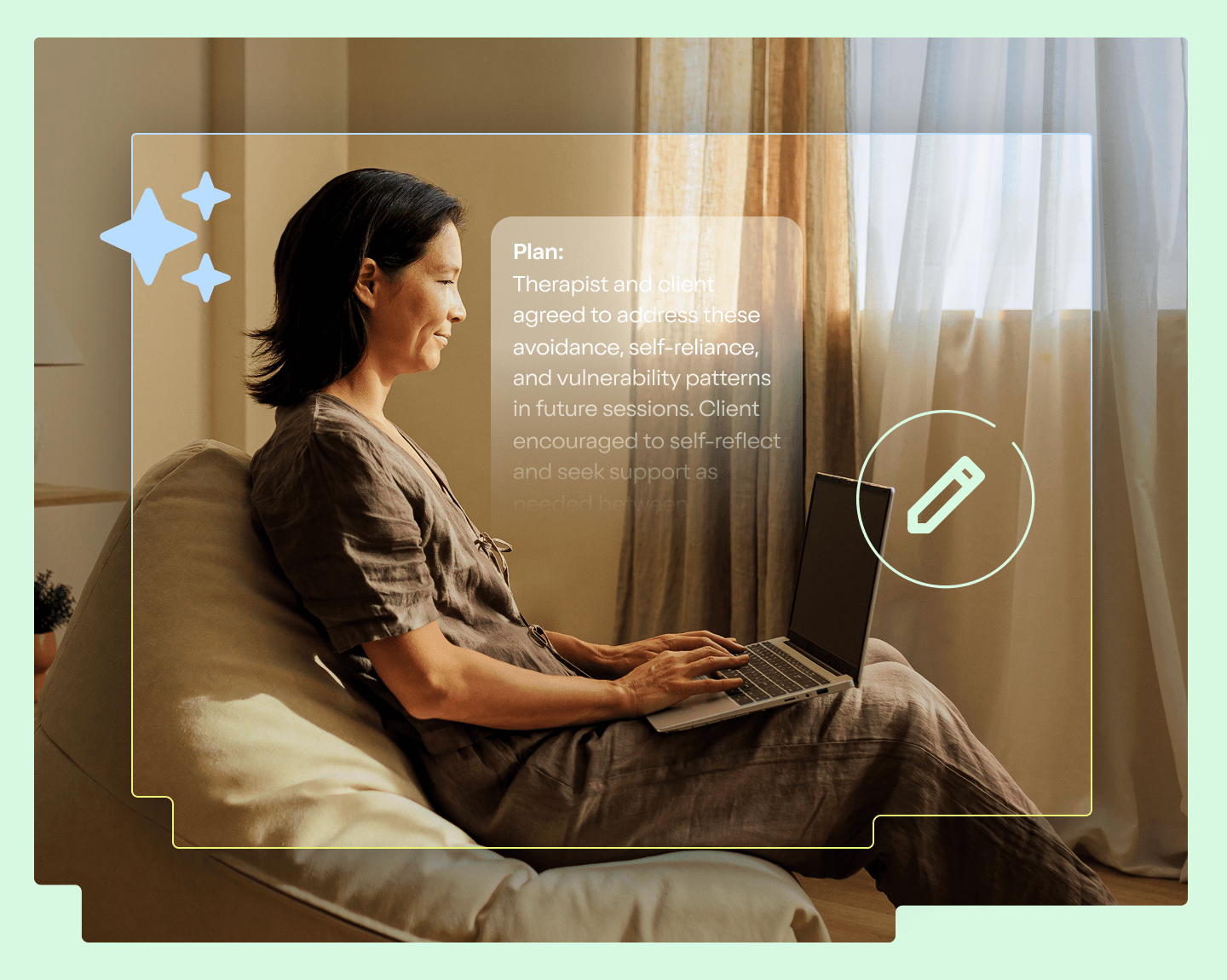

Supporting providers and clinical quality

AI-powered notes and summaries help providers deliver the most personalized care

Introducing Talkcast personalized podcasts

To help members stay engaged and working towards their mental health goals between sessions, Talkspace therapists can now create a personalized podcast for an audience of one. After the therapist reviews a script created based on the member’s therapy objectives, our AI engine generates a custom Talkcast recording for the member. They can listen to it as often as they like, whenever and wherever works for them.

"It helps me review everything that my therapist & I talked about. I really like that it outlines an exercise that I can do."

“This is great! My Talkspace clients really appreciate my personal support between sessions, and this new tool will be helpful!”

“At Talkspace, we are committed to integrating AI in ways that enhance the therapeutic experience while upholding the highest standards of clinical care and ethical responsibility. By leveraging AI and developing tools that are clinically led and ethical by design, we can continue to advance the accessibility, delivery, and quality of digital mental health care.”

Published, peer-reviewed research on Talkspace therapy

Talkspace partners with major research institutions to validate the quality of our treatment methods.

More than 60,000 5-star reviews

Read why people love using Talkspace.

See all reviews

Ozzie

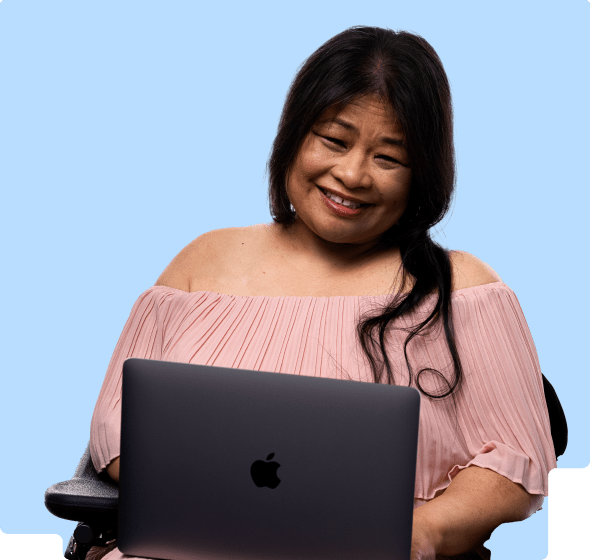

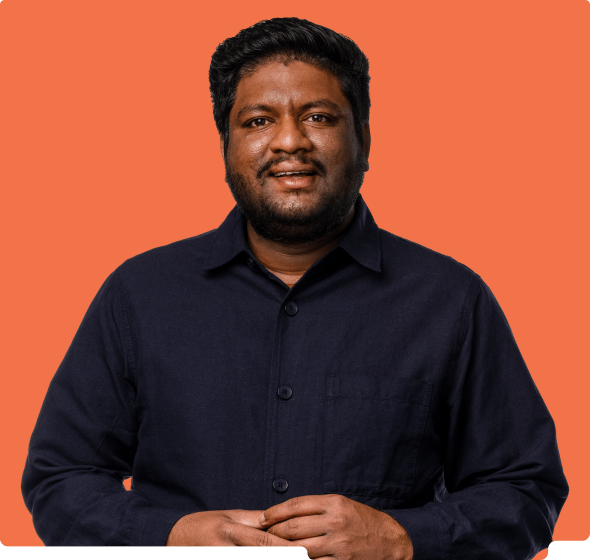

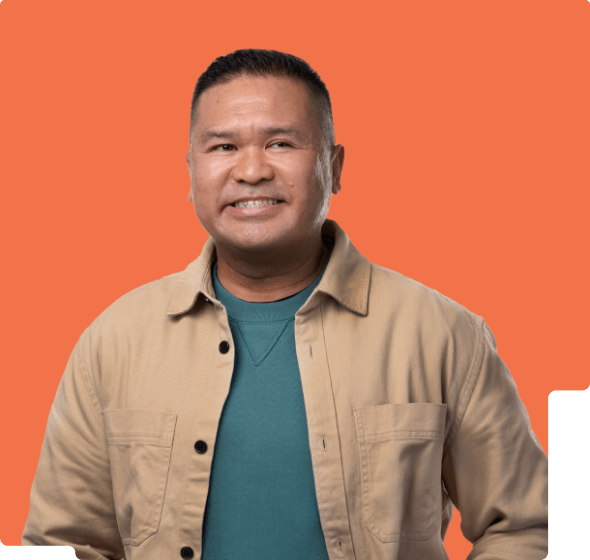

Dori

Samantha

Hari

Fatima

Diana

Melissa

Evert

April

Corisha

AI reading list

Discover resources that promote understanding of how AI is impacting therapy and mental health.

Put AI to work for your people

Talkspace’s AI Innovation Group is continuously building industry-leading AI tools under the guidance of our clinicians. Interested in learning how your organization can benefit from our proprietary technology? Let’s have a conversation.

Any questions?

Find trustworthy answers on all things mental health at Talkspace.

What is AI-supported therapy?

At Talkspace, we think of AI-supported therapy as the use of artificial intelligence to support the work of human therapists. One example is AI notes, which help therapists keep detailed and accurate information about member sessions so that they can focus only on you. Another example is Talkcast personalized podcasts, which give members specifically tailored content to listen to between sessions. At Talkspace, AI doesn’t replace human therapists; it is used to strengthen the human-centered therapeutic relationship.

What is the difference between a human therapist and an AI therapist?

Only human therapists possess true empathy, responsiveness, and the ability to form therapeutic relationships. Human therapists listen with sensitivity and discernment, adapt their questions and responses based on subtle cues like tone and body language, provide deeply personalized insights, and seek to understand your complex thoughts and emotions. AI “therapists” lack true emotional understanding and cannot form authentic relationships or offer the clinical expertise that experienced licensed therapists do.

How does Talkspace use AI in online therapy?

Talkspace utilizes AI-based technology to help augment the work of human licensed therapists. For example, AI-generated notes and session prep summaries help therapists provide deeply personalized care, and an AI alert system scans member messages to detect risk of self-harm and (if detected) sends the therapist an urgent alert. Talkspace also uses AI to help personalize the member experience — for example, through Talkcast AI-generated “podcasts” created for individual members to listen to between therapy sessions.

Is AI-supported therapy safe, secure, and confidential?

AI-supported therapy must comply with healthcare privacy regulations like HIPAA and must use message encryption and secure data storage. Talkspace maintains robust security measures and meets or exceeds all applicable laws addressing patient privacy and data storage. At Talkspace, we are focused on safety, security, privacy, and compliance with all relevant standards, such as NIST and HIPAA.

Is it ethical to use AI for therapy?

Because AI is a rapidly evolving technology, ethical standards around its use, including standards for therapy, are still in formation. At Talkspace, however, we have developed a clear framework for ensuring that AI tools are ethical by design, meaning that they enhance the therapeutic process without causing harm. We are committed to integrating AI under the guidance of human clinicians while upholding the highest standards of clinical care and ethical responsibility.

Can AI replace a human therapist?

No, AI cannot replace human therapists. AI chatbots may be able to offer mental health support by suggesting exercises or sharing information about good mental health practices. However, an essential part of therapy is the “therapeutic relationship” between human therapist and client, something AI cannot replicate. Human therapists bring empathy, responsiveness, sensitivity, and discernment to therapy, and can provide deeply personalized interactions in a way that AI can’t. Human therapists remain essential for forming therapeutic relationships, providing personalized guidance, and treating complex mental health conditions.

Does AI make online therapy more effective?

There is not yet hard evidence that AI can make online therapy more effective, but AI tools can enhance online therapy by supporting human therapists or by creating highly personalized educational content for clients to use between sessions with their human therapist. AI is also being used in research to help identify the most effective therapeutic approaches for different conditions. It is likely that AI tools used to support therapists will be able to improve mental health outcomes, but it is too early to say that definitively. Effectiveness will depend on proper implementation of AI tools and their integration with human care.

Is Talkspace online AI-supported therapy right for me?

If you think therapy is right for you, then yes, Talkspace AI-supported therapy is probably right for you. Talkspace provides convenient, accessible support from licensed human therapists, who may use AI tools to enhance their ability to provide the best care.

Is online therapy with AI covered by insurance?

Insurance coverage for different kinds of therapy varies by provider and plan. Talkspace online therapy is covered in-network by most major insurance plans, including Medicare and TRICARE. That means that if your insurance plan covers Talkspace, we will bill your plan directly and you’ll likely only pay a copay (average $10). The AI tools used by Talkspace to support human therapists and enhance the therapeutic experience are not billed as a separate service (either to insurance companies or individuals) and are included in the regular cost of therapy.